Bird Box

Description as a Tweet:

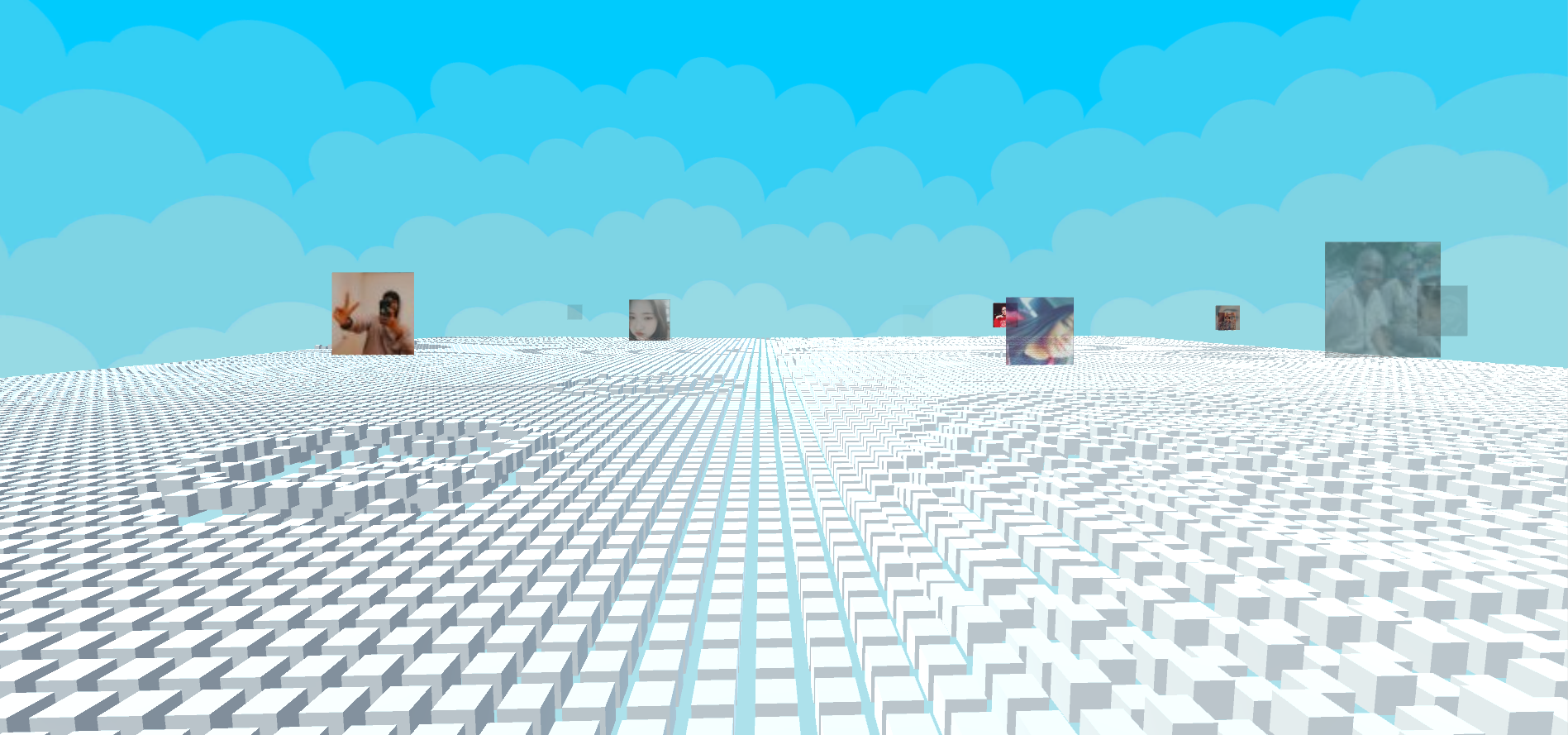

Our project turns tweets into... tweets. Live-streamed tweets generate bird calls, with the species chosen by sentiment analysis, and visual waves whose size and speed depend on the author's follower count.

Inspiration:

We were inspired by visualizations like Listen to Wikipedia and INSTRUMENT | One Antarctic Night, which both "visualize" data as a soundscape to create music! We researched the ways that Twitter in particular has distinct niches and tribes of users, and thought about how the social media ecosystem can also be thought of as a biological one. Although our hackathon build did not end up exploring this area, future steps would involve tying these inter-user connections into the visualization.

What it does:

Our project streams tweets, selected either by general trending topics or by user-defined filters, and uses them to generate bird calls from various species based on sentiment analysis, along with visual waves whose size and speed depend on the author's follower count.
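The two encodings described above (sentiment to bird species, follower count to wave size and speed) can be sketched roughly as follows. This is a minimal illustration, not the project's actual Unity code: it assumes the sentiment score arrives as a value in [0, 1] with higher meaning more positive, and the names `Species`, `speciesForSentiment`, `WaveParams`, and `waveForFollowers`, along with the species buckets, thresholds, and scaling constants, are all hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical species buckets; the writeup does not say which birds were used.
enum class Species { Crow, Sparrow, Songbird };

// Assumption: sentiment arrives as a score in [0, 1], where 0 is most negative
// and 1 is most positive. Out-of-range input is clamped into that interval.
Species speciesForSentiment(double score) {
    score = std::clamp(score, 0.0, 1.0);
    if (score < 0.35) return Species::Crow;     // negative tweets: harsh calls
    if (score < 0.65) return Species::Sparrow;  // neutral tweets: plain chirps
    return Species::Songbird;                   // positive tweets: melodic songs
}

// Visual parameters for the wave spawned by one tweet (hypothetical units).
struct WaveParams {
    double amplitude;  // size of the wave
    double speed;      // how fast the wave travels outward
};

// Follower counts span many orders of magnitude, so compress them with log10
// before scaling; otherwise a few huge accounts would dwarf every other wave.
WaveParams waveForFollowers(std::uint64_t followers) {
    const double reach = std::log10(static_cast<double>(followers) + 1.0);
    return WaveParams{0.5 + 0.25 * reach, 1.0 + 0.5 * reach};
}
```

With these placeholder constants, an author with 99 followers (reach = 2) gets a wave of amplitude 1.0, while one with 100 million followers (reach of about 8) gets roughly 2.5, so large accounts stand out without drowning out everyone else.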

How we built it:

We started off brainstorming and researching the different attributes that different APIs could provide and the ways that we could map those attributes to different encodings. We did a mini literature review of different papers and projects that explored similar ideas before diving into implementation. Some of us spent some time learning Unity, then we divided up the work into distinct components and started coding!

Technologies we used:

- C/C++/C#

Challenges we ran into:

Many of our most interesting ideas were too complex to build in the time available (e.g., traversing the entire Twitter user graph), so we had to focus on what we could accomplish in 36 hours. We also faced challenges integrating the relevant REST APIs into Unity, and we ran into performance issues because we were manipulating thousands of objects to visualize the data. We originally wanted to build the experience for VR, but were unable to get a headset.

Accomplishments we're proud of:

We're proud that we finished the project and narrowed its scope enough to make it manageable! We had a lot of ideas and different directions we could have gone in, and we could easily have gone too far and been unable to finish the core elements.

What we've learned:

We learned how to use Unity, think about data, and make pretty things!

What's next:

Next steps for the project might include expanding the range of attributes we encode, putting more work into advanced audio modulation, and exploring applications in AR (using geographical information) and VR (for immersion and interaction)! The Indico API was a neat way to turn raw tweets into usable data, but it would be more interesting to analyze the relationships between tweets as a whole. That would tie in both with the "wave" concept of social network impact and with the "ecosystem" concept of relating tweets back to birds and ecosystem dynamics.

Built with:

We used Unity3D to build the experience, Twitter's API to get data, and Indico's text analysis API for sentiment.

Prizes we're going for:

No Prizes Chosen